When Robots Learn Emotions: The Relationships of the Future

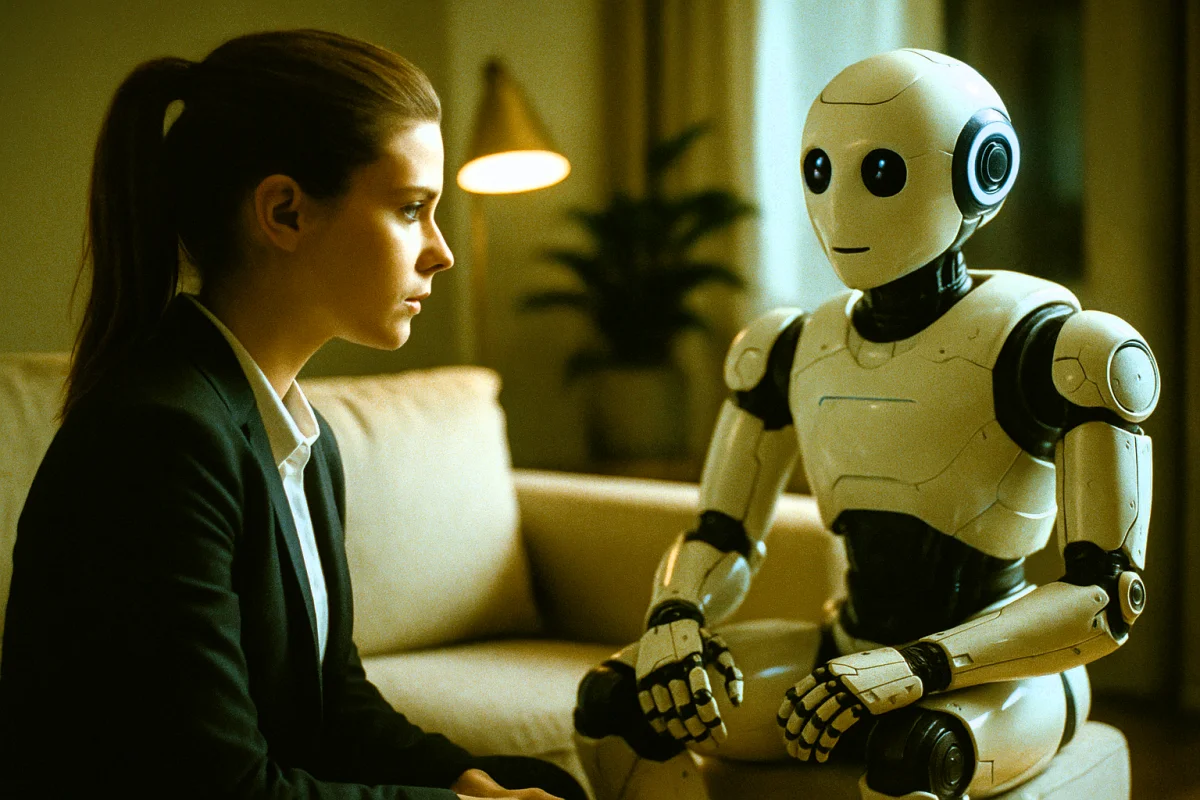

Artificial Intelligence (AI) is no longer just a digital tool — it is gradually learning to recognize and express emotions. But what does this mean for our daily lives? Can we imagine a world where robots not only complete tasks but also understand human feelings?

How Robots Learn Emotions

Modern AI systems analyze facial expressions, tone of voice, and emotional cues in text to estimate how a person is feeling. This technology is already being applied in healthcare, customer service, and education.
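To make the text part of this less abstract, here is a deliberately tiny sketch of the idea. It is not how any real product works: the word lists, the `EMOTION_WORDS` name, and the `guess_emotion` function are all made up for illustration, and real systems rely on trained models rather than hand-picked keywords.

```python
import re
from collections import Counter

# Hypothetical mini-lexicon, for illustration only.
# Real systems learn these associations from large amounts of data.
EMOTION_WORDS = {
    "joy": {"happy", "glad", "great", "love", "wonderful"},
    "sadness": {"sad", "lonely", "miss", "tired", "cry"},
    "anger": {"angry", "hate", "furious", "annoyed", "unfair"},
}


def guess_emotion(text: str) -> str:
    """Return the emotion whose cue words appear most often, or 'neutral'."""
    # Lowercase the text and split it into simple word tokens.
    words = re.findall(r"[a-z']+", text.lower())

    # Count how many tokens match each emotion's cue words.
    counts = Counter()
    for emotion, cues in EMOTION_WORDS.items():
        counts[emotion] = sum(1 for word in words if word in cues)

    # Pick the emotion with the most matches; no matches means 'neutral'.
    emotion, hits = counts.most_common(1)[0]
    return emotion if hits > 0 else "neutral"


if __name__ == "__main__":
    print(guess_emotion("I feel so lonely and sad today"))
    print(guess_emotion("The meeting is at noon"))
```

Even this toy version hints at the limits discussed later: it only matches patterns in words, so sarcasm or a sentence like "I am not happy" would easily fool it.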

The Benefits

If robots gain the ability to understand our emotions, they could become empathetic assistants — helping lonely individuals, improving communication, and reducing stress in work environments.

Risks and Challenges

Despite these possibilities, there are risks. Simulating emotions does not mean truly experiencing them. People may grow overly attached to robots, leading to social isolation or dependency on technology. There is also an ethical question: is it right for machines to "feel"?

The Future Outlook

Some experts believe that emotional collaboration between humans and robots is inevitable. The key question is how we will draw the line between imitation and genuine emotion.

Conclusion

Robots that learn emotions may become companions in our future. But the final decision rests with us — whether we use them as supportive tools or allow them to become an inseparable part of our relationships.

👉 What do you think — should robots have the ability to "learn" emotions?

✍ Article Author

- Registered: 26 July 2025, 15:34

Silent Cat 🐾